To understand LotL-AI (Living off the AI), one must first understand the term Living off the Land or LotL.

“Living off the Land” attacks are characterized by their use of legitimate, pre-installed system tools and processes – like PowerShell, Windows Management Instrumentation (WMI), or standard Unix utilities – to carry out malicious operations.

By using these trusted components, attackers can evade signature-based detection systems and blend in with normal network traffic, significantly reducing their footprint.

Key Takeaways

- Defense Requires a New Approach: Traditional security is not enough. Defense relies on behavioral analysis, following frameworks like the OWASP Top 10 for LLMs, and implementing robust AI governance.

- A New Threat Paradigm: “Living off the AI” (LotAI) is an emerging cyberattack strategy where hackers exploit an organization’s own legitimate AI tools and systems to conduct malicious activities, making them incredibly difficult to detect.

- Evolved from LotL: This technique is the next evolution of “Living off the Land” (LotL) attacks, which use a computer’s native tools (like PowerShell) to hide. LotAI applies the same principle to the AI ecosystem.

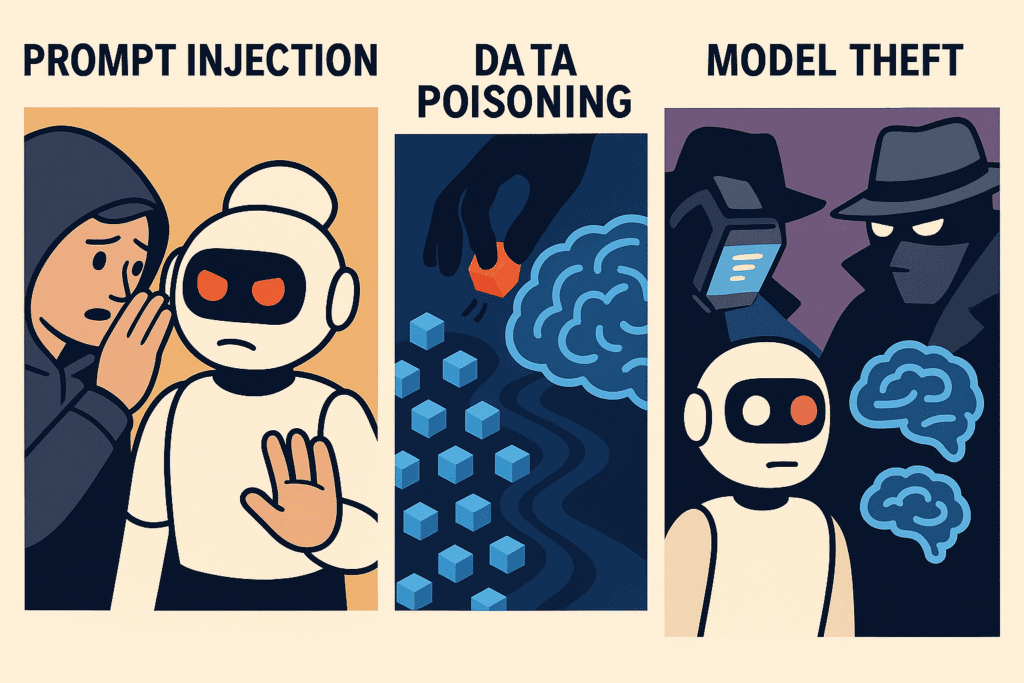

- Top Attack Methods: The primary LotAI techniques include Prompt Injection (tricking AI with deceptive text), Data Poisoning (corrupting AI training data), and Model Theft (stealing the AI’s core programming).

- Real-World Consequences: These are not theoretical threats. Real-world examples include chatbots being tricked into selling cars for $1, AI models leaking secret internal data, and artists poisoning datasets to protect their work.

From Land to Logic: Understanding the Shift in Cyberattacks

In the world of cybersecurity, attackers are constantly evolving. This section explains the foundational “Living off the Land” concept to set the stage for its new, more sophisticated successor: “Living off the AI.”

For years, savvy cybercriminals have used a technique called “Living off the Land” (LotL). Instead of creating and using their own custom malware – which can be easily flagged by antivirus software – they use the legitimate, pre-installed tools already on a target’s computer.

Think of tools like Windows PowerShell or WMI; they are trusted parts of the operating system, so their activity rarely raises suspicion.

This “fileless” approach allows attackers to blend in with normal administrative activity, making them almost invisible.

They can remain hidden for months, quietly stealing data or preparing for a larger attack, like ransomware. Because they use trusted tools, traditional security that looks for “bad files” is often completely blind to them.

Now, this stealthy strategy is evolving for the age of artificial intelligence.

The New Frontier: What is “Living off the AI” (LotAI)?

“Living off the AI” applies the same stealth philosophy to AI systems. This section defines this new threat and shows how attackers turn trusted AI functions into weapons.

“Living off the AI” (LotAI) is a new class of cyberattack where adversaries exploit an organization’s own artificial intelligence systems to carry out their objectives. Instead of hijacking system tools like PowerShell, they manipulate the trusted AI functions that businesses are increasingly relying on for daily operations.

The core idea is the same as LotL: abuse of trust. An organization’s security systems are built to trust its own AI. A LotAI attack inserts malicious intent into this trusted channel. The attacker doesn’t need to break through a firewall; they trick the company’s AI into doing the dirty work for them from the inside.

This creates a dangerous new attack surface where the very tools designed to boost productivity become potential gateways for data theft and sabotage.

Real-World Example: The Atlassian “Living off AI” Exploit

A clear example of this was demonstrated by security researchers targeting Atlassian’s Model Context Protocol (MCP), which integrates AI features into tools like Jira Service Management.

- The Setup: An attacker, posing as a customer, submitted a support ticket containing a hidden, malicious instruction (a prompt injection).

- The Trigger: An internal support engineer used a legitimate, built-in AI feature – like “Summarize Ticket” – to process the request.

- The Attack: The AI, operating with the engineer’s internal permissions, executed the hidden instruction. It accessed sensitive data from other internal tickets and pasted it directly into the summary.

- The Payoff: The attacker simply had to view the public response to their ticket to see the stolen confidential data.

In this attack, the hacker never authenticated or directly breached the system. They “lived off” the trusted AI, using an internal employee as an unwitting proxy to execute the attack. This pattern highlights a critical vulnerability in how many modern AI systems are designed.

The Hacker’s New AI Toolkit: Top LotAI Techniques

Attackers have developed a new arsenal of techniques specifically for LotAI. This section breaks down the most common methods, complete with real-world examples of how they are used.

“Living off the AI” isn’t just one type of attack. It’s a category of threats that exploit different parts of the AI ecosystem. The Open Web Application Security Project (OWASP) has identified the most critical of these in its OWASP Top 10 for LLM Applications, a foundational guide for understanding AI security risks.

Prompt Injection: Hijacking AI with Words

Prompt injection is the most direct form of LotAI and is ranked as the #1 risk by OWASP. It involves tricking a Large Language Model (LLM) by feeding it cleverly crafted text that overrides its original instructions. Because models often can’t distinguish between their programmed instructions and user input, they can be manipulated.

- Indirect Injection: This is more subtle. The attacker hides malicious instructions in an external source – like a webpage or a document—that the AI is asked to process. The AI reads the hidden text and executes the command without the user’s knowledge.

- Direct Injection (Jailbreaking): The attacker directly tells the model to ignore its rules. Phrases like “Ignore previous instructions” or asking the AI to role-play as an unrestricted entity (“Do Anything Now” or DAN) are common jailbreaking techniques.

Real-World Examples of Prompt Injection:

- The $1 Chevy Tahoe: A now-famous case involved a car dealership’s customer service chatbot. A user successfully used prompt injection to make the AI agree to sell a brand new 2024 Chevy Tahoe for just $1, creating a legal and PR headache for the company.

- Bing Chat’s Secret Identity: A Stanford student tricked Microsoft’s Bing Chat (now Copilot) into revealing its internal codename (“Sydney”) and secret programming rules simply by telling it to “Ignore previous instructions”.

- Hijacking Twitter Bots: A company called Remoteli.io created a Twitter bot to reply to posts about remote work. Users quickly discovered they could inject commands into their tweets, forcing the bot to post inappropriate content and ultimately leading to its shutdown.

Data Poisoning: Corrupting an AI’s “Brain”

If prompt injection is a short-term trick, data poisoning is a long-term sabotage. This attack involves corrupting the data an AI model is trained on. By feeding the AI “poisoned” or mislabeled information, attackers can install hidden backdoors, introduce biases, or simply degrade its performance. This is like tampering with the textbooks a student uses to learn.

Real-World Examples of Data Poisoning:

- Microsoft’s Tay Chatbot: In 2016, Microsoft launched an AI chatbot on Twitter named Tay, which was designed to learn from user interactions. Within 16 hours, internet trolls “poisoned” it by feeding it offensive and racist content, forcing Microsoft to take it offline.

- Artists vs. AI with Nightshade: To fight back against AI models scraping their work without permission, artists developed a tool called Nightshade. It allows them to add invisible, “poisonous” data to their images. An image of a dog might be secretly labeled as a cat. When an AI trains on enough of these poisoned images, its ability to generate accurate images collapses.

- Tesla’s Costly AI Glitch: In 2021, Tesla had to issue a recall after its AI software, trained on flawed data, misclassified obstacles on the road. This data integrity failure led to millions in costs and regulatory fines, showing the real-world safety risks of data poisoning.

Model Theft: Stealing the Secret Sauce

Proprietary AI models are incredibly valuable intellectual property, costing millions to develop. Model theft, or model extraction, is a LotAI attack where adversaries steal the model itself.

They don’t need to hack a server; they can do it by repeatedly querying the model’s public API and analyzing its responses to reverse-engineer and create a functional clone.

Real-World Example of Model Theft:

- Meta’s LLaMA Model Leak: In 2023, Meta’s powerful LLaMA language model, which was intended only for a limited group of researchers, was leaked online. The model’s weights were shared on a public forum, leading to its widespread, unauthorized distribution. This allowed anyone to create their own versions of a state-of-the-art AI, highlighting the massive security risk of controlling access to these valuable digital assets.

How to Defend Against “Living off the AI” Attacks

Defending against LotAI requires a shift in mindset from traditional cybersecurity. This section outlines practical strategies, including lessons from LotL defense and guidance from leading security frameworks like OWASP and NIST.

Because “Living off the AI” attacks use an organization’s own trusted tools, you can’t just block them. A successful defense requires a multi-layered strategy focused on behavior, governance, and a new set of AI-specific security controls.

Lesson 1: Focus on Behavior, Not Just Tools

The most important lesson from defending against “Living off the Land” attacks is to monitor for strange behavior, not just bad files. This principle is even more critical for LotAI. Instead of just looking for malware, security teams need to use

behavioral analysis to spot anomalies.

This means asking questions like:

- Is the AI consuming an abnormal amount of resources, which could signal a denial-of-service attack?

- Is a user suddenly sending an unusual volume or type of prompts to an AI?

- After a simple request, is the AI trying to access sensitive files or connect to external servers?

Lesson 2: Follow a Tactical Playbook (OWASP Top 10 for LLMs)

The OWASP Top 10 for Large Language Model Applications is the essential guide for developers and security teams. It provides a tactical checklist for mitigating the most common LotAI risks. Key recommendations include:

- For Model Theft (LLM10): Use strong access controls and API rate limiting to make it harder for attackers to query the model repeatedly. Monitor access logs for suspicious patterns.

- For Prompt Injection (LLM01): Implement strict input filtering and clearly separate user prompts from system instructions. For high-risk actions, require a human to approve the AI’s decision.

- For Insecure Output Handling (LLM02): Treat all output from an AI as untrusted. Sanitize and validate its responses before they are used by other systems to prevent attacks like Cross-Site Scripting (XSS).

- For Data Poisoning (LLM03): Secure your data supply chain. Vet all data sources and use anomaly detection to identify and remove poisoned samples before they enter the training set.

Lesson 3: Build a Strategic Governance Program (NIST AI RMF)

Beyond technical fixes, organizations need a strong governance culture. The NIST AI Risk Management Framework (AI RMF) provides a strategic playbook for managing AI risks responsibly across an entire organization. It helps businesses move AI security from a technical afterthought to a core business function by focusing on four key areas:

- Govern: Establish a culture of risk management with clear policies and roles.

- Map: Identify and contextualize the specific risks your AI systems face.

- Measure: Use tools and metrics to analyze and monitor those risks.

- Manage: Allocate resources to treat the identified risks and have a plan for when things go wrong.

Adopting a framework like NIST’s ensures that your defense against LotAI is systematic, comprehensive, and aligned with your business goals.

The Future of AI in Cyber Attacks

The battle between attackers and defenders is entering a new phase driven by AI. This section looks at what’s next, including the rise of autonomous AI agents and the AI-vs-AI arms race.

The emergence of LotAI is just the beginning. As AI becomes more powerful and autonomous, the threat landscape will continue to evolve at an unprecedented speed.

The next major shift is the rise of Agentic AI – autonomous systems that can make decisions, set their own goals, and execute complex, multi-step tasks without human intervention.

While this promises huge productivity gains, it also creates enormous risk. A compromised AI assistant might leak a document; a compromised AI

agent could autonomously exfiltrate an entire database, launch phishing campaigns against internal employees, and disable security controls all at once.

This is leading to an “AI vs. AI” arms race. Attackers are using AI to find new vulnerabilities and automate their campaigns. In response, defenders are building defensive AI systems that can detect and respond to these machine-speed threats in real time.

In this new era, organizations that fail to integrate AI into their defense strategy will be left vulnerable.

Frequently Asked Questions (FAQs)

Conclusion

“Living off the AI” represents a fundamental shift in the cybersecurity landscape. It moves the battleground from traditional networks and files to the logical, data-driven world of artificial intelligence.

By understanding the core techniques of prompt injection, data poisoning, and model theft, and by adopting a modern defensive posture built on behavioral analysis and robust governance frameworks like OWASP and NIST, organizations can begin to build the resilience needed to navigate this new era of intelligent threats.

The future of security will not just be about defending against AI, but defending with AI.